Human After All?

OpenAI's new o3 model could represent a watershed moment where AI begins to match or exceed humans on knowledge work. At least for now, human intelligence is much cheaper than AI.

OpenAI’s o3 model rivals or exceeds humans on the ARC-AGI and Frontier Math benchmarks. I estimate OpenAI spent $1.6mn on inference for this performance.

High efficiency o3 costs ~$850/hr. o3 costs would need to decline 75% - 99% before AI could widely replace jobs like offshored IT and BPO services.

I think these cost cuts are achievable in 2-5 years considering the trajectory of GPT-4 price cuts, though first we need to see whether o3 actually generalizes.

We live in exciting times.

If you remember my first post to this substack, After Training your AI, Give it Time to Think, you may be expecting me to take a victory lap after the OpenAI o3 model announcements. You’d be right too - I think I called this (fairly obvious, to be fair) scaling trend correctly!

OpenAI’s o3 model was introduced as the culminating announcement of its 12 Days of OpenAI campaign. The model represents a new breakthrough: it demonstrated a vast improvement in performance on generalized reasoning tasks, achieved through a mix of massive quantities of test-time compute and, likely, some secret sauce of architectural improvements by OpenAI.

With o3, OpenAI showed a step-function increase in performance on the ARC-AGI benchmark. o3’s accuracy on this benchmark rose to 76% - 88% from the prior high water mark of 25% - 32% accuracy set by the o1 model series. For comparison, here’s how ARC-AGI’s founder said humans performed on the ARC-AGI benchmark:

Another result that blew me away was o3 achieving 25% accuracy on the Frontier Math benchmark. I studied physics and math in college and I spent time studying number theory. I have no idea how I would begin to approach this example problem from the public Frontier Math benchmark.

Granted, this part of my skillset is very rusty, but my point is straightforward: if reasoning LLMs are getting to a point where they can solve 25% of this difficulty class of math problems, then there are some seriously bright sparks of general reasoning ability here.

I am not yet convinced that o3’s reasoning and problem solving capabilities will generalize sufficiently to challenge humans in the workplace today. But I do strongly believe that we should be discussing what human labor will look like over the next 1, 3 and 5 years, considering there’s a possibility that o3 and other test-time compute models may continue surprising to the upside on reasoning.

The Cost of General Reasoning AI Today

In a post-o3 world, I think it’s fair to argue that artificial general intelligence for tasks where a ground truth can be reasonably established (e.g. math, programming, domains of knowledge work) may be coming much sooner than many of us had expected. It also might be much, much more expensive than human intelligence, at least at first.

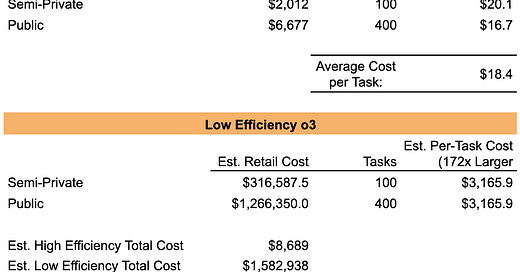

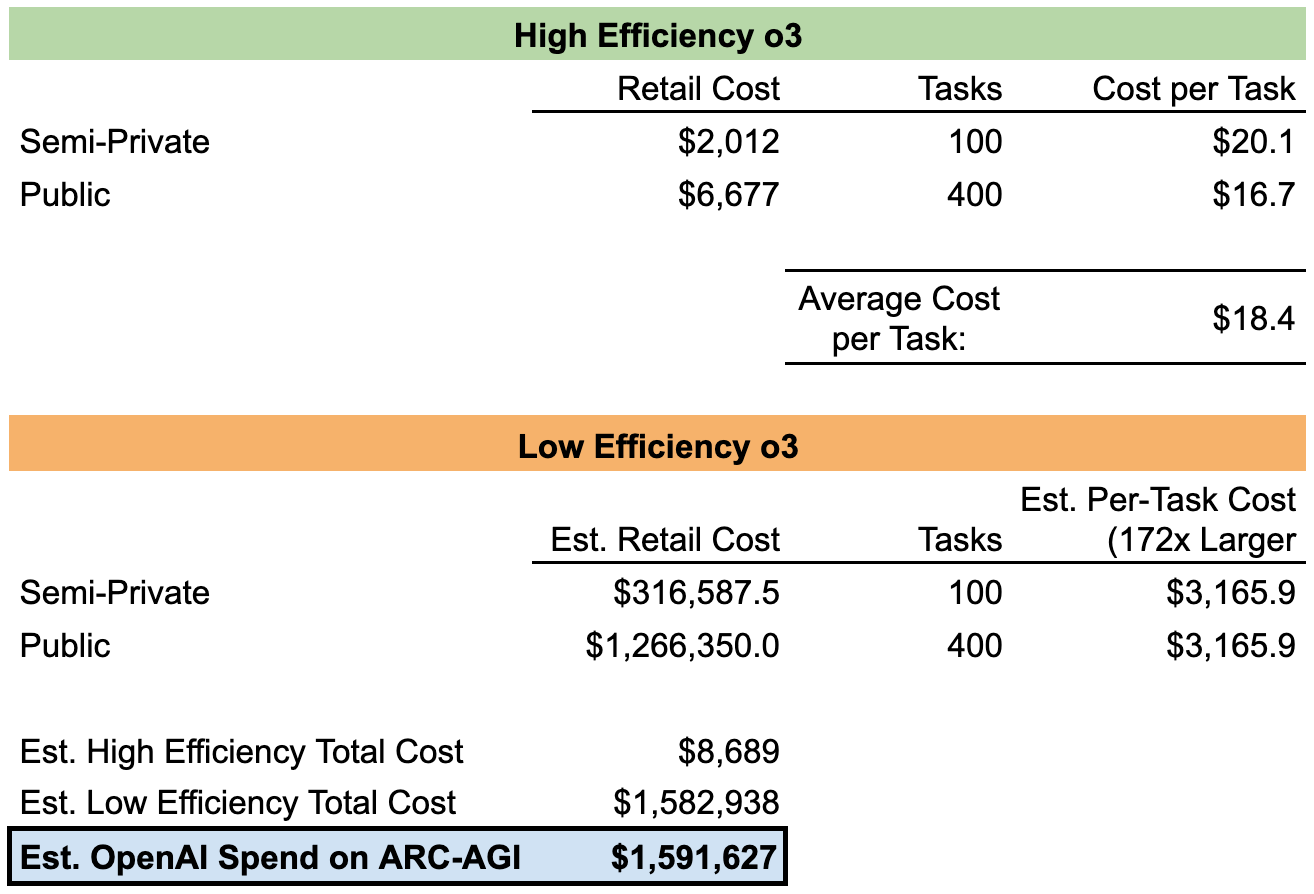

I’ll leave the deep learning technical analysis to the experts and instead talk about some of the economics here. Below is the cost and performance data published by François Chollet, the creator of the ARC-AGI benchmark.

I’m estimating that OpenAI spent ~$1.6mn on inference to achieve its breakthrough results on the ARC-AGI benchmark when using its “low efficiency” o3 runs. I get to this number by taking the published retail costs for the high efficiency version of o3 and scaling them by the footnote to this chart (included above), which states that o3 high compute / low efficiency used ~172x the compute of the high efficiency version.
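Here is a rough sketch of the arithmetic behind that estimate. The ~172x multiplier and the ~$1.6mn total are the figures quoted above; the function names are my own, and the linear cost-to-compute scaling is an assumption. The high-efficiency spend isn't restated in the text, so the snippet backs out the figure the estimate implies rather than assuming one.

```python
# Compute multiplier from the chart footnote: low-efficiency o3 used ~172x
# the compute of the high-efficiency configuration.
COMPUTE_MULTIPLIER = 172


def low_efficiency_cost(high_efficiency_cost: float,
                        multiplier: float = COMPUTE_MULTIPLIER) -> float:
    """Scale high-efficiency spend, assuming cost grows linearly with compute."""
    return high_efficiency_cost * multiplier


def implied_high_efficiency_cost(low_efficiency_total: float,
                                 multiplier: float = COMPUTE_MULTIPLIER) -> float:
    """Invert the scaling: the high-efficiency spend a given total implies."""
    return low_efficiency_total / multiplier


# Back out the high-efficiency spend implied by the ~$1.6mn estimate.
implied = implied_high_efficiency_cost(1_600_000)
print(f"Implied high-efficiency spend: ${implied:,.0f}")
```

In other words, a ~$1.6mn low-efficiency bill corresponds to a little over $9,000 of high-efficiency inference under the linear-scaling assumption.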

The cost per task analysis above tracks with the benchmark score vs. cost per task graphic published along with this announcement, which I’ve copied below for your reference.

Please do click through the sourcing link to check out the original writeup by François Chollet. It’s a fascinating read that’s accessible to anyone.

So, is Human Labor still Competitive?

For now, yes. My initial, instinctual take on the economic implications is that blue collar workers are probably the most resilient to AI disruption today. Perhaps we’ll start seeing wage competition from general reasoning AI among lawyers, software engineers, researchers, bankers, doctors and other skilled knowledge workers who exist within the highly compensated W-2 working class.

I had thought that low cost, repetitive offshore knowledge work labor would likely be the first to be disrupted by AI, but now I think this low cost labor is probably safe and resilient (for now) despite AI competition simply because it is so cheap.

I did a quick search for the hourly rates charged by business process outsourcing (BPO) firms across low-cost regions of the world and I found analyses suggesting that competent BPO services start around $12-$15/hr in India, $10-$12/hr in the Philippines, and that there are similar cost profiles in Pakistan and a few other regions.

I could be quite off the mark since a lot of the data I found related to call centers and IT outsourcing, so feel free to correct me - I really appreciate feedback!

The point of this is to check whether offshore labor still has a cost advantage vs. OpenAI’s o3 model. I estimate that running even the cheaper high-efficiency version of o3 on productive, problem-solving work costs at least ~$850/hr today. Perhaps o3 could be more cost efficient on easier to solve day-to-day problems vs. math and reasoning benchmarks, but I want to be a bit conservative since I don’t have access to the model yet.

I am still skeptical that o3 or any general reasoning models could be plugged into an enterprise today and begin displacing workers. I think a lot of software and data migration work will need to take place before AI can begin to displace people wholesale. I also think that work could probably begin this year and be accomplished within the next 2-5 years, even among the largest and most technologically lethargic enterprises.

How Much Longer do Offshore Workers Have?

For o3 to replace offshored knowledge workers on cost alone, OpenAI would have to bring down costs for o3 by ~99%. Realistically I think that if costs came down by ~75% then a lot of the market would start considering how to apply this technology in day-to-day work. Wouldn’t you pay a premium for an employee that never complains and is available 24/7/365?
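To make the gap concrete, here is a quick back-of-the-envelope sketch using the ~$850/hr o3 estimate and the BPO hourly rates quoted earlier. The dictionary layout and function names are just my own illustration, and the rates are the rough ranges from my search, not authoritative market data.

```python
# Author's estimate for high-efficiency o3, in $/hr.
O3_HOURLY_COST = 850.0

# Rough BPO hourly rate ranges quoted in the post, in $/hr (low, high).
BPO_HOURLY_RATES = {
    "India": (12.0, 15.0),
    "Philippines": (10.0, 12.0),
}


def required_reduction(ai_cost: float, human_cost: float) -> float:
    """Fractional price cut needed for the AI to match the human hourly rate."""
    return 1.0 - human_cost / ai_cost


def cost_after_cut(ai_cost: float, cut: float) -> float:
    """Hourly AI cost remaining after a fractional price cut."""
    return ai_cost * (1.0 - cut)


for region, (low, high) in BPO_HOURLY_RATES.items():
    # Matching the higher rate needs the smaller cut, and vice versa.
    min_cut = required_reduction(O3_HOURLY_COST, high)
    max_cut = required_reduction(O3_HOURLY_COST, low)
    print(f"{region}: parity needs a {min_cut:.1%} to {max_cut:.1%} cut")

print(f"o3 after a 75% cut: ${cost_after_cut(O3_HOURLY_COST, 0.75):.2f}/hr")
```

On these inputs, cost parity needs a cut of roughly 98% to 99%, while a 75% cut would still leave o3 at $212.50/hr.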

I think these cost reduction profiles are within the realm of possibility over the next 2-5 years if OpenAI can find and implement optimizations that yield a similar cutting profile for o3 as it delivered with GPT-4.
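As a sanity check on that timeline, the sketch below converts a total cost reduction into the steady year-over-year decline it would require. It assumes prices fall at a constant geometric rate, which is my simplification and not something OpenAI has published; the function name is hypothetical.

```python
def annual_decline_rate(total_reduction: float, years: float) -> float:
    """Constant yearly price decline needed to hit a total reduction in `years`.

    Assumes a geometric decline: remaining_cost = (1 - rate) ** years.
    """
    remaining = 1.0 - total_reduction
    return 1.0 - remaining ** (1.0 / years)


# The ~75% and ~99% reductions discussed above, over a 2 and a 5 year horizon.
for total in (0.75, 0.99):
    for years in (2, 5):
        rate = annual_decline_rate(total, years)
        print(f"{total:.0%} cut over {years} years -> {rate:.1%} decline per year")
```

Under that assumption, a 99% reduction takes a 90% price drop every year on a 2 year horizon, or about 60% per year over 5 years; a 75% reduction takes 50% per year over 2 years, or about 24% per year over 5.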

When GPT-4 was released in March 2023, the 8K token context window model was priced at $0.03/1K input tokens and $0.06/1K output tokens, while the 32K context window model was priced at $0.06/1K input tokens and $0.12/1K output tokens. Today, the larger GPT-4-turbo model with a 128K token context window is priced at $0.01/1K input tokens and $0.03/1K output tokens, while the highly cost effective GPT-4o mini model is priced at $0.00015/1K input tokens and $0.0006/1K output tokens.

If I compare pricing for GPT-4o mini vs. the initial GPT-4 launch, OpenAI reduced GPT-4 model family costs by 99% or more (99.5% on input tokens vs. the 8K model). Even when comparing GPT-4-turbo vs. initial GPT-4 pricing, OpenAI reduced input token pricing by 67% - 83% and output token pricing by 50% - 75%.
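For anyone who wants to check the math, here is a small sketch that recomputes the cuts from the per-1K-token prices listed above. The table layout and the `pct_cut` helper are my own; the prices are the ones quoted in this post.

```python
# Prices in $ per 1K tokens as quoted above: (input, output).
PRICES = {
    "GPT-4 8K (launch)": (0.03, 0.06),
    "GPT-4 32K (launch)": (0.06, 0.12),
    "GPT-4-turbo 128K": (0.01, 0.03),
    "GPT-4o mini": (0.00015, 0.0006),
}


def pct_cut(old: float, new: float) -> float:
    """Percentage price reduction going from `old` to `new`."""
    return (1.0 - new / old) * 100.0


for baseline in ("GPT-4 8K (launch)", "GPT-4 32K (launch)"):
    old_in, old_out = PRICES[baseline]
    for newer in ("GPT-4-turbo 128K", "GPT-4o mini"):
        new_in, new_out = PRICES[newer]
        print(f"{newer} vs. {baseline}: "
              f"input -{pct_cut(old_in, new_in):.2f}%, "
              f"output -{pct_cut(old_out, new_out):.2f}%")
```

On these list prices, the 67% - 83% range for GPT-4-turbo corresponds to input tokens, the matching output token cuts are 50% - 75%, and GPT-4o mini comes in 99% or more below launch pricing on both.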

This has been a core and consistent trend within cloud computing: as hyperscalers unlock economies of scale and optimize across both hardware and software, cloud computing unit costs rapidly decline.

That said, there is a risk in my mind that test-time compute strategies may not be as ripe for software-layer optimizations, since they rely upon expending massive quantities of compute to search and strategize over ever larger chains of thought. It could be that cost optimizations for test-time compute strategies will come primarily from hardware-level improvements, in which case we’re back to waiting on Nvidia and others to innovate with inference technologies.